Overview

This page provides an overview of the models and methods implemented in regenie. A full description is given in our paper.

regenie carries out genome-wide association tests for both quantitative and binary (case-control) phenotypes. Starting at regenie v4.0, it also supports survival analysis for time-to-event data (See Survival analysis section below). It is designed to handle

- A large number of samples. For example, it is ideally suited to the UK Biobank dataset with 500,000 samples.

- A combination of genetic data from a micro-array, imputation and exome sequencing.

- A large number of either quantitative traits (QTs), binary (case-control) traits (BTs), or time-to-event traits (TTEs)

- Accounting for a set of covariates

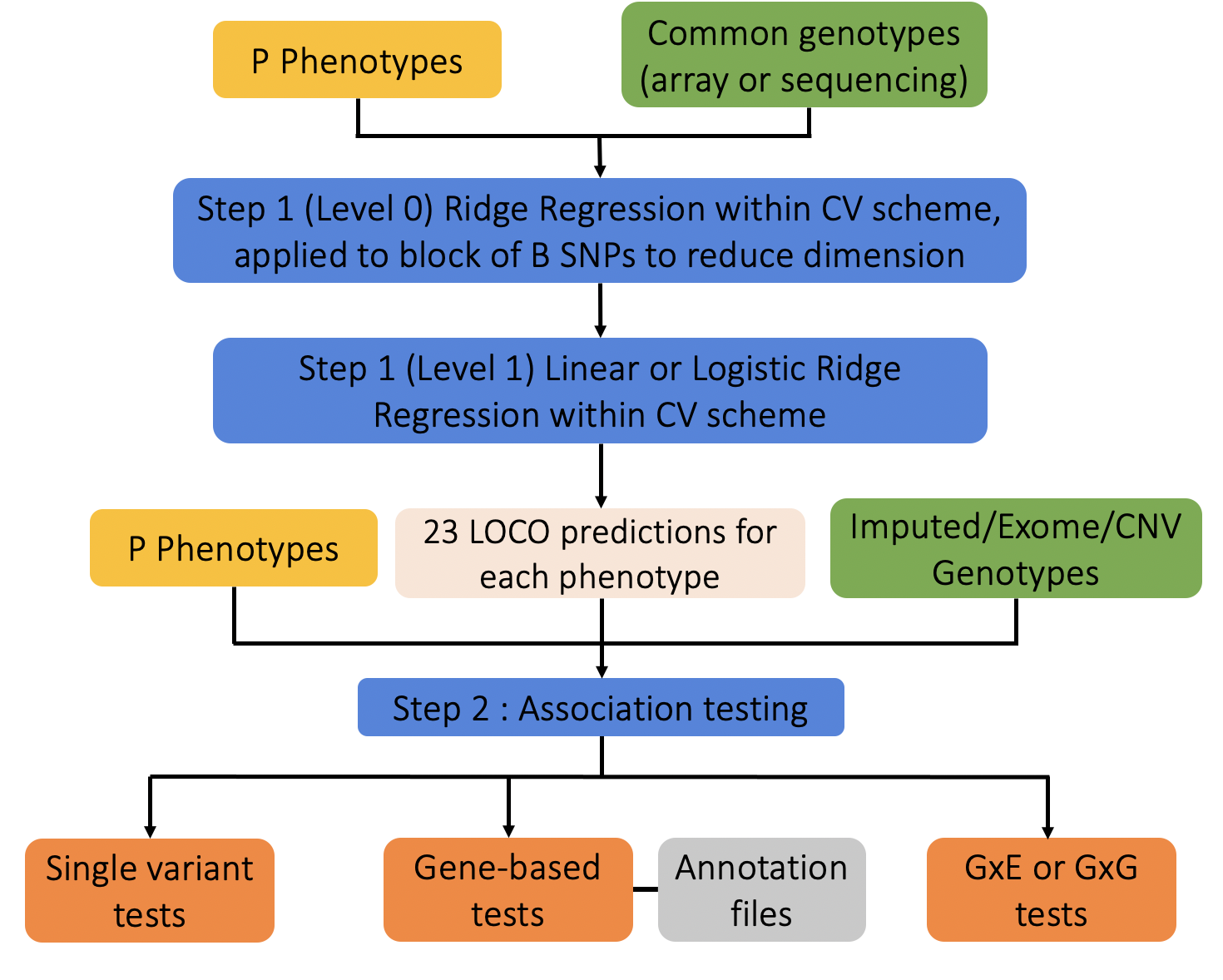

An overview of the regenie method is provided in the figure below. Essentially, regenie is run in 2 steps:

- In the first step a subset of genetic markers are used to fit a whole genome regression model that captures a good fraction of the phenotype variance attributable to genetic effects.

- In the second step, a larger set of genetic markers (e.g. imputed markers) are tested for association with the phenotype conditional upon the prediction from the regression model in Step 1, using a leave one chromosome out (LOCO) scheme, that avoids proximal contamination.

Step 1 : Whole genome model

In Step 1 a whole genome regression model is fit at a subset of the total set of available genetic markers. These are typically a set of several hundred thousand () common markers from a micro-array.

Ridge regression (level 0)

regenie reads in the markers in blocks of consecutive markers (--bsize option).

In each block, a set of ridge regression

predictors are calculated for a small range of shrinkage

parameters (using --l0 option [default is 5]) .

For a block of SNPs in a matrix and phenotype vector we calculate predictors

where

The idea behind using a range of shrinkage values is to capture the unknown number and size of truly associated genetic markers within each window. The ridge regression takes account of Linkage disequilibrium (LD) within each block.

These predictors are stored in place of the genetic markers in matrix , providing a large reduction in data size. For example, if and and shrinkage parameters are used, then the reduced dataset will have predictors.

Ridge regression is used in this step for both quantitative and binary traits.

Cross-validation (level 1)

The predictors generated by the ridge regression step will all be positively correlated with the phenotype. Thus, it is important to account for that correlation when building a whole genome wide regression model.

When analyzing a quantitative trait we use a second level of ridge regression on the full set of predictors in . This approach is inspired by the method of stacked regressions1.

We fit the ridge regression for a range of shrinkage parameters (--l1 option) and choose a single

best value using K-fold cross validation scheme. This assesses the

predictive performance of the model using held out sets of data, and aims to control

any over-fitting induced by using the first level of ridge regression

to derive the predictors.

In other words, we fit the model

where is estimated as and the parameter is chosen via K-fold cross-validation.

For binary traits, we use a logistic ridge regression model to combine the predictors in

where is the probability of being a case and captures the effects of non-genetic covariates.

Genetic predictors and LOCO

Once has been estimated we can construct the genetic prediction

Also, since each column of the matrix will be associated with a chromosome we can can also construct a genetic prediction ignoring any one chromosome, by simply ignoring those columns when calculating the prediction. This is known as the Leave One Chromosome Out (LOCO) approach. These LOCO predictions are valuable at Step 2 of regenie when each marker is tested for associated (see below).

For binary traits, it is the linear predictor in a logistic regression model using LOCO that is saved, and used as an offset when fitting logistic regression models to test for association.

Multiple phenotypes

The dimension reduction step using ridge regression can be used very efficiently to model multiple phenotypes at once. The ridge regression equations for a block of SNPs in a matrix and a single phenotype in a matrix take the form

where does not depend on

If instead phenotypes are stored in columns of a matrix , then the matrix can be applied jointly to calculate the matrix of estimates , and this can take advantage of parallel linear algebra implementations in the Eigen matrix library.

Covariates

Covariates, such as age and sex and batch effect variables can be included in the regenie model.

For quantitative traits, any covariates are regressed out of phenotypes and genotypes before fitting the model.

For binary traits, we fit a null model with only covariates, and use predictions from that model as an offset when fitting the logistic regression model.

Step 2 : Single-variant association testing

In Step 2, a larger set of markers are tested for association with the trait (or traits). As with Step 1, these markers are also read in blocks of markers, and tested for association. This avoids having to have all markers stored in memory at once.

Quantitative traits

For quantitative traits, we use a linear regression model for association testing.

- Covariates are regressed out of the phenotypes and genetic markers.

- The LOCO predictions from Step 1 are removed from the phenotypes.

- Linear regression is then used to test association of the residualized phenotype and the genetic marker.

- Parallel linear algebra operations in the Eigen library are used where possible.

Binary traits

For binary traits, logistic regression score test is used to test association of the phenotype and the genetic marker.

The logistic regression model includes the LOCO predictions from Step 1 as an offset. Covariates are included in the linear predictor in the usual way.

When the case-control ratio is imbalanced, standard association tests don't control Type I error well at rare genetic markers. regenie has two options to handle this

Firth logistic regression

Standard maximum likelihood estimates are generally biased. The Firth correction2 removes much of the bias, and results in better calibrated test statistics. The correction involves adding a penalty term to the log-likelihood,

where the penalty term corresponds to the use of Jeffrey's Prior. This prior has the effect of shrinking the effect size towards zero.

regenie uses a Firth correction when the p-value from the standard

logistic regression test is below a threshold (default 0.05).

It also includes a novel, accurate and fast approximate Firth correction which

is ~60x faster than the exact Firth correction

(see the option --firth).

The p-value reported in regenie is based on a likelihood ratio test (LRT), and we use the Hessian of the log-likelihood without the penalty term to estimate the standard error (SE).

This may cause an issue in meta-analyses with rare variants, as the effect size estimate and SE may not match with the LRT p-value.

Hence, we added an option --firth-se to report a SE computed instead from the effect size estimate and the LRT p-value.

Saddle point approxiation (SPA) test

The SPA test approximates the null distribution of the test statistic by approximating the cumulant generating function of the test statistic, which involves all of the higher order moments3$^,$4. This provides a better estimation of the tail probabilities compared to using standard asymptotic theory which relies on the normal approximation and uses only the first two moments of the dsitribution. A tail probability is obtained as

and is the cumulant generating function of the test statistic and

is obtained by using a root-finding algorithm for . As this approximation

has been found not to work very well for ultra-rare variants, a minimum minor

allele count (MAC) is used to filter out these variants before testing (option --minMAC).

Step 2 : Gene-based testing

Instead of performing single-variant association tests, multiple variants can be aggregated in a given region, such as a gene, using the following model

where 's represent the single variants included in the test, 's and 's are weights and effect sizes, respectively, for each variant, and is a link function for the phenotypic mean . We also denote by the score statistics obtained from the single-variant tests. This can be especially helpful when testing rare variants as single-variant tests usually have lower power performance.

To avoid inflation in the gene-based tests due to rare variants as well as reduce computation time, we have implemented the collapsing approach

proposed in SAIGE-GENE+5, where ultra-rare variants are aggregated into a mask.

For highly imbalanced binary traits, SPA/Firth correction can be used to calibrate the test statistics in the

gene-based tests as proposed in Zhao et al. (2020)6 using --firth/--spa.

Burden tests

Burden tests, as defined in Lee et al. (2014)7, assume , where is a fixed coefficient, which then leads to the test statistic These tests collapse variants into a single variable which is then tested for association with the phenotype. Hence, they are more powerful when variants have effects in the same direction and of similar magnitude. In regenie, multiple options are available to aggregate variants together into a burden mask beyond the linear combination above (see here). For example, the burden tests that were employed in Backman et al. (2021)8 use the default strategy in regenie of collapsing variants by taking the maximum number of rare alleles across the sites.

Variance component tests

Unlike burden tests, SKAT9 assume the effect sizes $\beta_i$ come from an arbitrary distribution with mean 0 and variance $\tau^2$ which leads to the test statistic Hence, SKAT can remain powerful when variant effects are in opposite directions.

The omnibus test SKATO10 combines the SKAT and burden tests as So setting $\rho=0$ corresponds to SKAT and $\rho=1$ to the burden test. In practice, the parameter $\rho$ is chosen to maximize the power [regenie uses a default grid of 8 values {$0, 0.1^2, 0.2^2, 0.3^2, 0.4^2, 0.5^2, 0.5, 1$} and set the weights $w_i = Beta(MAF_i,1,25)$].

To obtain the p-value from a linear combination of chi-squared variables, regenie uses Davies' exact method11 by default. Following Wu et al (2016)12, regenie uses Kuonen's saddlepoint approximation method13 when the Davies' p-value is below 1e-5 and if that fails, it uses Davies' method with more stringent convergence parameters (lim=1e5,acc=1e-9).

The original SKATO method uses numerical integration when maximizing power across the various SKATO models that use different values for $\rho$. We also implement a modification of SKATO, named SKATO-ACAT, which instead uses the Cauchy combination method14 to combine the p-values from the different SKATO models.

Cauchy combination tests

The ACATV15 test uses the Cauchy combination method ACAT to combine single variant p-values $p_i$ as where we set $\widetilde{w}_i = w_i \sqrt{MAF(1-MAF)}$. This test is highly computationally tractable and is robust to correlation between the single variant tests.

The omnibus test ACATO15 combines ACATV with the SKAT and burden tests as

where unlike the original ACATO test, we only use one set of the weights $w_i$. Alternatively, we augment the test to include an extended set of SKATO models beyond SKAT and Burden (which correspond to $\rho$ of 0 and 1 in SKATO respectively) and use the default SKATO grid of 8 values for $\rho$.

Sparse Burden Association Test

regenie can generate burden masks which are obtained by aggregating single variants using various annotation classes as well as allele frequency thresholds. The Sparse Burden Association Test (SBAT)16 combines these burden masks in a joint model imposing constraints of same direction of effects where $M_i$ represent a burden mask and we solve

The SBAT method tests the hypothesis $H_0: \gamma_i=0$ for all $i$ vs. $H_1: \gamma_i > 0$ for some $i$. By using this joint model, the SBAT test accounts for the correlation structure between the burden masks and with the non-negative constraints, it can lead to boost in power performance when multiple burden masks are causal and have concordant effects. This test has the nice property that it combines model selection of the masks (via the sparsity induced by the non-negative assumption) with model inference (it is well calibrated and powerful).

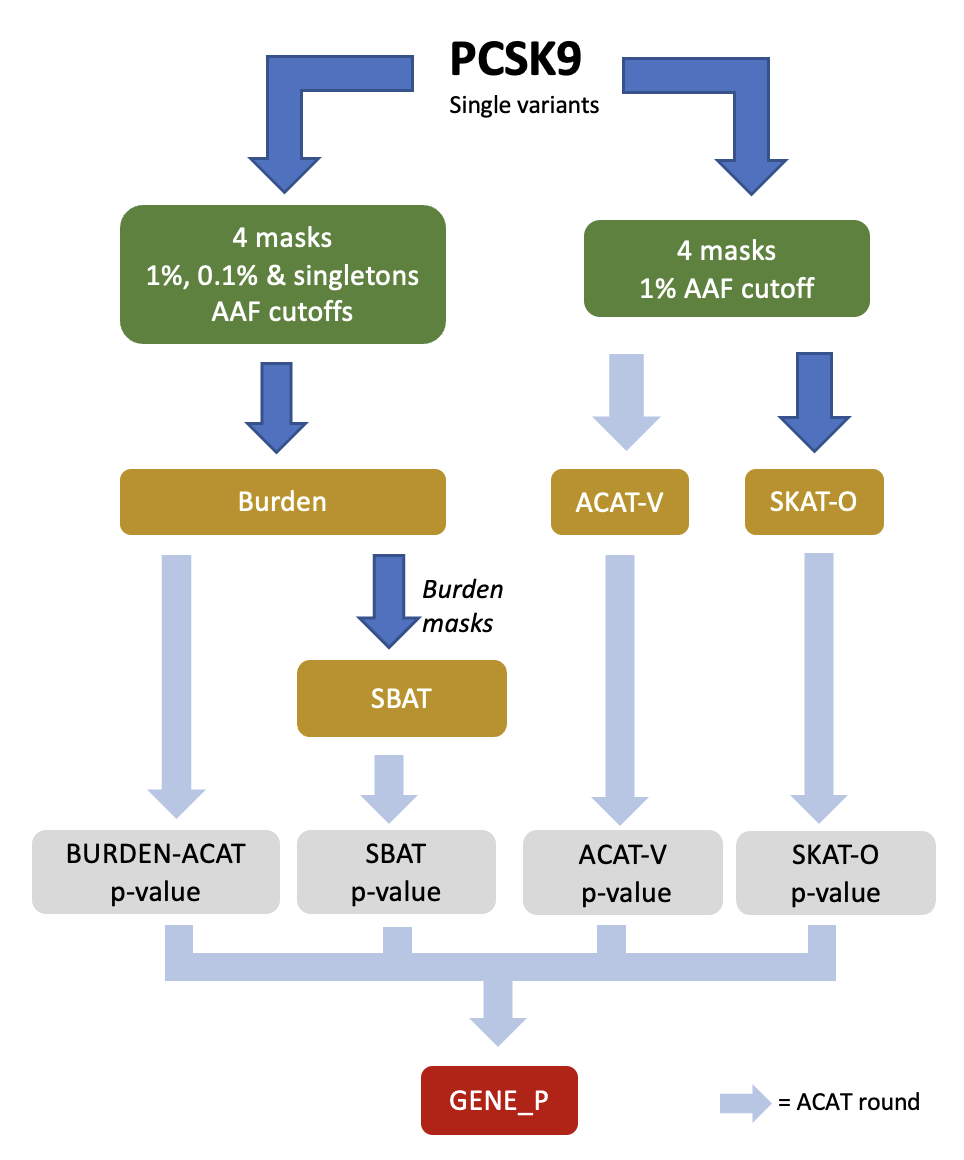

GENE_P

As the different gene-based tests in REGENIE can be more powerful under different genetic architectures, we propose a unified strategy, named GENE_P, that combines the strengths of these tests. It uses ACAT to combine the p-values of the SKATO, ACATV, Burden and SBAT tests and obtain an overall assessment of significance for a genetic region (e.g. gene). The diagram below illustrates the GENE_P test using 4 masks (i.e. combination of variant annotations) and 3 allele frequency cutoffs when performing gene-based tests.

Step 2 : Interaction testing

The GxE tests are of the form where $E$ is an environmental risk factor and $G$ is a marker of interest, and $\odot$ represents the Haddamard (entry-wise) product of the two. The last term in the model allows for the variant to have different effects across values of the risk factor. Note: if $E$ is categorical, we use a dummy variable for each level of $E$ in the model above.

We can look at the following hypotheses:

- $H_0: \beta = 0$ given $\gamma = 0$, which is a marginal test for the SNP

- $H_0: \beta = 0$, which is a test for the main effect of the SNP in the full model

- $H_0: \gamma = 0$, which is a test for interaction

- $H_0: \beta = \gamma = 0$, which tests both main and interaction effects for the SNP

Misspecification of the model above, such as in the presence of heteroskedasticity, or the presence of high case-control imbalance can lead to inflation in the tests. Robust (sandwich) standard error (SE) estimators17 can be used to adress model misspecification however, they can suffer from inflation when testing rare variants or in the presence of high case-control imbalance18$^,$19.

In regenie, we use a hybrid approach which combines:

- Wald test with sandwich estimators

- Wald test with heteroskedastic linear models (for quantitative traits)

- LRT with penalized Firth regression (for binary traits)

For quantitative traits,

we use the sandwich estimators HC3 to perform a Wald test for variants whose minor allele count (MAC) is above 1000 (see --rare-mac).

For the remaining variants, we fit a heteroskedastic linear model (HLM)20

where we assume $\epsilon \sim N(0, D)$ where $D$ is a diagonal matrix with entries $\sigma^2\exp{(1 + E\theta_1 + E^2\theta_2)}$. This formulation allows for the phenotypic variance to also depend on the risk factor $E$. By incorporating both the linear and quadratic effect of $E$ in the mean and variance of $Y$, this model provides robustness to heteroskedasticity (Note: the $E^2$ terms are only added when $E$ is quantitative). Wald tests are then performed for the null hypotheses listed above.

For binary traits, we consider the following interaction model

where we also include a non-linear effect for $E$ (not if categorical).

The sandwich estimator HC3 is used in a Wald test for variants whose MAC is above 1000 (see --rare-mac) otherwise the model-based standard errors are used.

When Firth is specified, we only apply the Firth correction using LRT if the p-value for the interaction term $\gamma$ from the Wald test is below a specified threshold (see --pThresh). So the added $E^2$ term as well as the use of the Firth penalty

help with case-control imbalance and model misspecification for the effect of $E$ on the phenotype.

Survival analysis

Starting with regenie v4.0, we have enabled survival analysis, improving the power for analyzing common diseases where time-to-event data is available by leveraging the Cox Proportional Harzard model. We assume that samples without an event are right-censored, i.e. the survival time is only known to be greater than a certain value. It is important to encode this information correctly into the phenotypes.

Step 1: Whole genome model using cox ridge regression

In step 1, Level 0 is run using linear ridge regression with the time variable taken as the response. In Level 1, instead of linear/logistic ridge regression, we use Cox Ridge regression21 to combine the predictions $W$ from Level 0.

where $\lambda_0(t)$ is the baseline hazard function, and, for the $i$-th individual, $\lambda_i(t)$ is the hazard function, $w_i$ is the set of ridge predictors from Level 0, and $\mu_i$ captures the effects of non-genetic covariates.

We fit the cox ridge regression for a range of shrinkage parameters and select the best value using a K-fold cross validation scheme.

With the estimated $\hat{\alpha}$, we construct LOCO predictions which capture population structure, relatedness and polygenicity.

Step 2: Single variant and gene-based burden tests

For time-to-event traits, the cox proportional hazard regression model is used to test the association between the phenotype and the genetic marker. Note: the only supported gene-based test is the burden test.

The cox proportional hazard regression model includes the LOCO predictions from Step 1 as an offset.

We test the null hypothesis $H_0: \beta = 0$ using a score test. When the event rate is low, the standard score test doesn't control Type I error well at rare genetic markers. To reduce the bias and achieve a more robust test, regenie uses Firth correction22 when the p-value from the standard score test is below a threshold (default 0.05).

The firth correction provides a well-calibrated test, but comes with a computational cost. To mitigate this burden in Cox regression, we include a fast approximate test, which gives results very similar to the exact Firth test.

Missing Phenotype data

With QTs, missing values are mean-imputed in Step 1 and they are

dropped when testing each phenotype in Step 2 (unless using --force-impute).

With BTs, missing values are mean-imputed in Step 1 when fitting the level 0 linear ridge regression and they are dropped when fitting the level 1 logistic ridge regression for each trait. In Step 2, missing values are dropped when testing each trait.

To remove all samples that have missing values at any of the

phenotypes from the analysis, use option --strict in step 1 and 2.

This can also be used when analyzing a single trait to only keep individuals with

complete data by setting the phenotype values of individuals to remove to NA.

Note: imputation is only applied to phenotypes; covariates are not allowed to have missing data.

References

-

Breiman, L. Stacked regressions. Machine learning 24, 49–64 (1996). ↩

-

Firth, D. Bias reduction of maximum likelihood estimates. Biometrika 80, 27–38 (1993). ↩

-

Butler, R. W. Saddlepoint approximations with applications. (Cambridge University Press, 2007). ↩

-

Dey, R., Schmidt, E. M., Abecasis, G. R. & Lee, S. A fast and accurate algorithm to test for binary phenotypes and its application to PheWAS. The American Journal of Human Genetics 101, 37–49 (2017). ↩

-

Zhou, W. et al. Set-based rare variant association tests for biobank scale sequencing data sets. medRxiv (2021). ↩

-

Zhao, Z. et al. UK biobank whole-exome sequence binary phenome analysis with robust region-based rare-variant test. Am J Hum Genet 106, 3–12 (2020). ↩

-

Lee, S., Abecasis, G. R., Boehnke, M. & Lin, X. Rare-variant association analysis: Study designs and statistical tests. Am J Hum Genet 95, 5–23 (2014). ↩

-

Backman, J. D. et al. Exome sequencing and analysis of 454,787 UK biobank participants. Nature 599, 628–634 (2021). ↩

-

Wu, M. C. et al. Rare-variant association testing for sequencing data with the sequence kernel association test. Am J Hum Genet 89, 82–93 (2011). ↩

-

Lee, S., Wu, M. C. & Lin, X. Optimal tests for rare variant effects in sequencing association studies. Biostatistics 13, 762–75 (2012). ↩

-

Davies, R. B. Algorithm AS 155: The distribution of a linear combination of χ 2 random variables. Applied Statistics 29, 323–333 (1980). ↩

-

Wu, B., Guan, W. & Pankow, J. S. On efficient and accurate calculation of significance p-values for sequence kernel association testing of variant set. Ann Hum Genet 80, 123–35 (2016). ↩

-

Kuonen, D. Miscellanea. Saddlepoint approximations for distributions of quadratic forms in normal variables. Biometrika 86, 929–935 (1999). ↩

-

Liu, Y. & Xie, J. Cauchy combination test: A powerful test with analytic p-value calculation under arbitrary dependency structures. J Am Stat Assoc 115, 393–402 (2020). ↩

-

Liu, Y. et al. ACAT: A fast and powerful p value combination method for rare-variant analysis in sequencing studies. Am J Hum Genet 104, 410–421 (2019). ↩↩

-

Ziyatdinov, A. et al. Joint testing of rare variant burden scores using non-negative least squares. The American Journal of Human Genetics 111, 2139–2149 (2024). ↩

-

MacKinnon, J. G. & White, H. Some heteroskedasticity-consistent covariance matrix estimators with improved finite sample properties. Journal of Econometrics 29, 305–325 (1985). ↩

-

Tchetgen Tchetgen, E. J. & Kraft, P. On the robustness of tests of genetic associations incorporating gene-environment interaction when the environmental exposure is misspecified. Epidemiology 22, 257–61 (2011). ↩

-

Voorman, A., Lumley, T., McKnight, B. & Rice, K. Behavior of QQ-plots and genomic control in studies of gene-environment interaction. PLoS One 6, (2011). ↩

-

Young, A. I., Wauthier, F. L. & Donnelly, P. Identifying loci affecting trait variability and detecting interactions in genome-wide association studies. Nat Genet 50, 1608–1614 (2018). ↩

-

Simon, N., Friedman, J. H., Hastie, T. & Tibshirani, R. Regularization paths for cox’s proportional hazards model via coordinate descent. Journal of statistical software 39, 1–13 (2011). ↩

-

Heinze, G. & Schemper, M. A solution to the problem of monotone likelihood in cox regression. Biometrics 57, 114–119 (2001). ↩